Intel’s new Neural Processing Unit (NPU) marks a big step for AI in personal computers. This dedicated AI engine runs AI tasks faster and with less power than the CPU alone. The NPU is built into Intel’s latest Core Ultra processors.

The NPU can handle up to 11 trillion operations per second (TOPS). That is enough to run many AI features smoothly, and it works well with most current Windows AI features, although it falls short of the 40+ TOPS that Microsoft requires for Copilot+ PC features. Because the NPU takes AI work off the CPU, the CPU stays free for other tasks, which keeps the whole system responsive.
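
TOPS ratings like the 11 TOPS figure above come from simple arithmetic: the number of multiply-accumulate (MAC) units, times the clock speed, times two (a MAC is conventionally counted as two operations). The sketch below shows that calculation plus a rough latency estimate. The MAC count, clock speed, model size, and utilization factor are all hypothetical values chosen for illustration, not Intel's published specifications.

```python
def peak_tops(mac_units: int, clock_ghz: float) -> float:
    """Peak throughput in TOPS, assuming every MAC unit finishes one
    multiply-accumulate per cycle and each MAC counts as 2 operations."""
    ops_per_second = 2 * mac_units * clock_ghz * 1e9
    return ops_per_second / 1e12


def estimated_latency_ms(gops_per_inference: float, tops: float, utilization: float) -> float:
    """Rough inference latency: work divided by the throughput actually achieved.
    Real workloads rarely reach peak TOPS, hence the utilization factor in (0, 1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    effective_ops_per_second = tops * 1e12 * utilization
    return gops_per_inference * 1e9 / effective_ops_per_second * 1e3


if __name__ == "__main__":
    # Hypothetical configuration chosen to land near 11 TOPS.
    tops = peak_tops(mac_units=4096, clock_ghz=1.4)
    print(f"peak: {tops:.2f} TOPS")
    # Hypothetical model needing 10 GOPs per inference, running at 40% utilization.
    print(f"latency: {estimated_latency_ms(10, tops, 0.4):.2f} ms")
```

The utilization factor matters: a peak TOPS number is an upper bound, and how close a real model gets depends on how well its operations map onto the hardware.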

Intel built the NPU from specialized blocks, including Neural Compute Engines and DMA (direct memory access) engines, that work together to speed up AI jobs. Intel’s compiler and driver software map each AI workload onto these blocks, so models run faster while using less power.

Intel NPU: Bringing AI to the Everyday

Artificial intelligence is no longer a futuristic concept. It’s here, and it’s becoming increasingly integrated into our everyday lives. Intel is at the forefront of this AI revolution, and its Neural Processing Units (NPUs) are playing a key role in making AI more accessible and powerful.

What is an NPU?

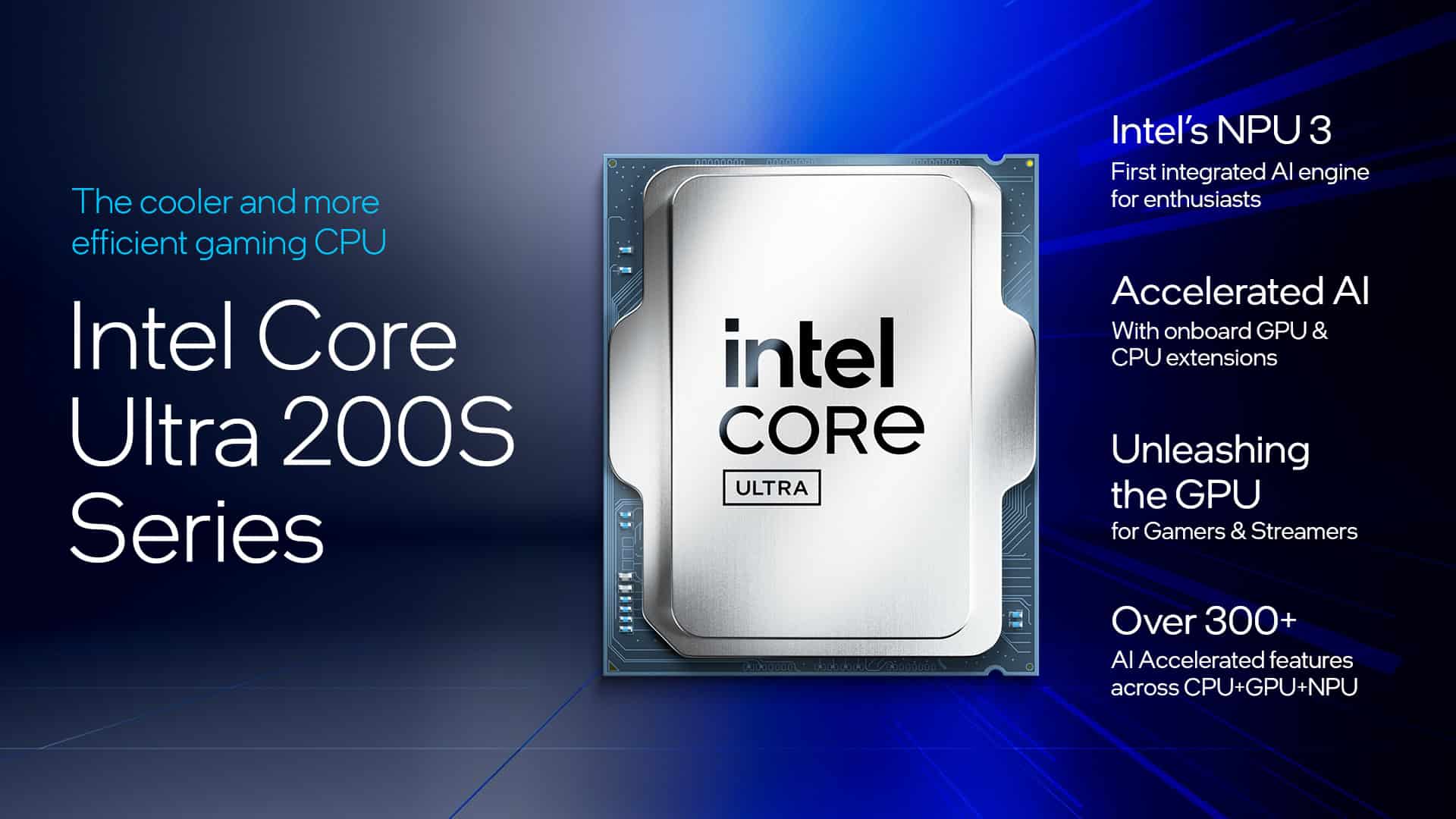

An NPU is a specialized processor designed specifically to accelerate AI tasks. It’s like a mini-brain within your computer, optimized for the complex calculations and data processing required for AI applications. Think of it as a co-processor that works alongside your CPU and GPU to handle AI workloads more efficiently.

Why NPUs Matter

NPUs offer several advantages over traditional CPUs and GPUs for AI processing:

- Dedicated AI Acceleration: NPUs are purpose-built for the matrix math at the heart of neural networks, so they can run machine learning and deep learning inference much faster than a general-purpose CPU, and often more efficiently than a GPU.

- Power Efficiency: NPUs run AI workloads at much lower power than a CPU or GPU doing the same job, making them ideal for laptops and mobile devices where battery life is crucial.

- Improved Performance: By offloading AI tasks to the NPU, your CPU and GPU are free to handle other tasks, leading to improved overall system performance.
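
Power efficiency and offloading both come down to energy per inference: power multiplied by the time the task takes. The sketch below works through that arithmetic. The wattages and latencies are hypothetical numbers for illustration, not measurements of any Intel CPU or NPU.

```python
def energy_per_inference_mj(power_watts: float, latency_ms: float) -> float:
    """Energy = power x time. Watts times milliseconds gives millijoules."""
    return power_watts * latency_ms


def inferences_per_watt_hour(power_watts: float, latency_ms: float) -> float:
    """How many inferences one watt-hour (3,600 J) of battery would cover."""
    joules_per_inference = energy_per_inference_mj(power_watts, latency_ms) / 1000
    return 3600 / joules_per_inference


if __name__ == "__main__":
    # Hypothetical numbers for the same AI task, not measurements.
    devices = {"CPU": (15.0, 40.0), "NPU": (3.0, 20.0)}
    for name, (watts, ms) in devices.items():
        print(f"{name}: {energy_per_inference_mj(watts, ms):.0f} mJ/inference, "
              f"{inferences_per_watt_hour(watts, ms):,.0f} inferences/Wh")
```

Even if the two devices took the same time, the lower-power one would use less energy per inference; finishing sooner compounds the saving.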

Intel’s NPU Journey

Intel has been steadily integrating NPUs into its processors, starting with Meteor Lake. These integrated NPUs bring AI acceleration to everyday laptops and desktops, enabling new experiences and capabilities.

- Meteor Lake: Marked the debut of Intel’s integrated NPUs, bringing AI to the mainstream.

- Arrow Lake: The upcoming Arrow Lake processors will feature even more powerful NPUs, further enhancing AI capabilities.

- Lunar Lake: Expected in late 2024 or early 2025, Lunar Lake will feature a significantly more powerful fourth-generation NPU, NPU 4, promising a major leap in AI performance.

Intel NPU Roadmap

| Processor Generation | NPU Version | Key Features |
|---|---|---|
| Meteor Lake | NPU 2.7 | First integrated NPU, basic AI acceleration |
| Arrow Lake | NPU 3.0 | Improved performance and efficiency |
| Lunar Lake | NPU 4.0 | Significant performance leap, advanced AI capabilities |

The Future of Intel NPUs

Intel is committed to advancing its NPU technology, exploring new use cases and applications for AI acceleration. We can expect to see even more powerful and versatile NPUs in the future, enabling new experiences in areas like image and video processing, natural language processing, and more. Intel’s NPUs are paving the way for a future where AI is seamlessly integrated into every aspect of our computing experience.

Key Takeaways

- Intel’s NPU is a dedicated AI engine that speeds up AI tasks in personal computers

- The NPU is built into Intel’s latest Core Ultra processors

- Intel’s NPU delivers up to 11 trillion operations per second (TOPS), enough for most current Windows AI features

Overview of Intel NPU

Intel’s Neural Processing Unit (NPU) is a specialized AI accelerator built into Core Ultra processors. It boosts AI workload performance while reducing power use.

Architecture and Components

The NPU uses Neural Compute Engines for fast math. These fixed-function engines handle compute-heavy operations such as matrix multiplication and convolution. The NPU also has Streaming Hybrid Architecture Vector Engines (SHAVE), programmable units that provide parallel vector compute for more general operations.
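
One reason a single engine can cover both matrix multiplication and convolution is that a convolution can be rewritten as a matrix multiply. The sketch below uses the common im2col technique to illustrate that idea; it is a general textbook method written in plain Python, not a description of how Intel's Neural Compute Engines are actually implemented.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix multiply: bulk multiply-accumulate work of the kind a
    fixed-function matrix engine is built for."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
            for row in a]


def im2col(image: Matrix, kh: int, kw: int) -> Matrix:
    """Unroll every kh x kw patch (stride 1, no padding) into one column.
    Row di * kw + dj holds pixel (di, dj) of every patch."""
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[image[i + di][j + dj] for i in range(out_h) for j in range(out_w)]
            for di in range(kh) for dj in range(kw)]


def conv2d_as_matmul(image: Matrix, kernel: Matrix) -> Matrix:
    """2-D convolution (deep-learning style, i.e. cross-correlation) computed as a
    single (1 x kh*kw) @ (kh*kw x out_h*out_w) matrix multiply."""
    kh, kw = len(kernel), len(kernel[0])
    flat_kernel = [[kernel[di][dj] for di in range(kh) for dj in range(kw)]]
    flat_out = matmul(flat_kernel, im2col(image, kh, kw))[0]
    out_w = len(image[0]) - kw + 1
    # Reshape the flat result back into out_h rows of out_w values.
    return [flat_out[i:i + out_w] for i in range(0, len(flat_out), out_w)]


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    kernel = [[1, 0], [0, -1]]  # each pixel minus its lower-right neighbor
    print(conv2d_as_matmul(image, kernel))  # [[-4, -4], [-4, -4]]
```

With many filters, the single-row kernel matrix becomes one row per filter, turning the whole layer into one large matrix multiply.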

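DMA engines move data between system memory (DRAM) and the NPU’s software-managed cache, so the compute engines are not left waiting for data. The NPU also has its own device MMU (memory management unit), which works with the system IOMMU to keep the NPU’s memory accesses isolated and secure.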
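
The device MMU and system IOMMU mentioned above translate the addresses the NPU uses into physical memory addresses, and they block access to memory the operating system never mapped for the device. The toy model below illustrates that idea with a one-level page table. The 4 KiB page size, the dictionary page table, and the `IOMMUFault` name are assumptions for illustration; real MMUs and IOMMUs use multi-level tables, caches, and hardware fault reporting.

```python
PAGE_SIZE = 4096  # assumed 4 KiB pages


class IOMMUFault(Exception):
    """Raised when the device touches memory the OS never mapped for it."""


def translate(device_addr: int, page_table: dict) -> int:
    """Map a device-visible address to a physical address via a one-level
    page table (a simplification of real multi-level translation)."""
    page, offset = divmod(device_addr, PAGE_SIZE)
    if page not in page_table:
        raise IOMMUFault(f"unmapped device address {device_addr:#x}")
    return page_table[page] * PAGE_SIZE + offset


if __name__ == "__main__":
    # Hypothetical mapping: the driver exposed two pages of buffers to the NPU.
    table = {0: 0x1A2, 1: 0x3F0}
    print(hex(translate(0x0010, table)))  # 0x1a2010
    try:
        translate(0x5000, table)
    except IOMMUFault as err:
        print("blocked:", err)
```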

The NPU uses scratchpad SRAM for quick data access. It can also use system DRAM when needed. All these parts work together to run AI models fast and efficiently.
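
A small, fast scratchpad only helps if data is staged into it in pieces that fit. The sketch below shows the general tiling pattern, assuming a scratchpad that must hold three square tiles at once (two inputs and one output accumulator). `load_tile` stands in for a DMA transfer, and the scratchpad size in the example is hypothetical; this is a generic illustration of tiling, not Intel's actual scheduling.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def largest_tile(scratchpad_bytes: int, bytes_per_element: int) -> int:
    """Largest square tile size t such that three t x t tiles (A, B and the
    output accumulator) fit in the scratchpad at the same time."""
    return math.isqrt(scratchpad_bytes // (3 * bytes_per_element))


def load_tile(src: Matrix, r0: int, c0: int, size: int) -> Matrix:
    """Stand-in for a DMA transfer: copy one tile (clipped at the matrix edges)
    from 'DRAM' (the full matrix) into a 'scratchpad' buffer."""
    return [row[c0:c0 + size] for row in src[r0:r0 + size]]


def tiled_matmul(a: Matrix, b: Matrix, tile: int) -> Tuple[Matrix, int]:
    """C = A @ B computed tile by tile; also returns the number of tile transfers."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    transfers = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            # The output tile stays on-chip while we sweep the shared dimension.
            acc = [[0.0] * min(tile, m - j0) for _ in range(min(tile, n - i0))]
            for k0 in range(0, k, tile):
                a_t, b_t = load_tile(a, i0, k0, tile), load_tile(b, k0, j0, tile)
                transfers += 2
                for i, a_row in enumerate(a_t):
                    for j in range(len(acc[i])):
                        acc[i][j] += sum(a_row[p] * b_t[p][j] for p in range(len(b_t)))
            for i, row in enumerate(acc):  # one write-back of the finished tile
                c[i0 + i][j0:j0 + len(row)] = row
            transfers += 1
    return c, transfers


if __name__ == "__main__":
    t = largest_tile(scratchpad_bytes=1200, bytes_per_element=4)  # hypothetical sizes
    a = [[float(i + j) for j in range(25)] for i in range(25)]
    for size in (1, t):
        _, transfers = tiled_matmul(a, a, size)
        print(f"tile={size}: {transfers} transfers")
```

Larger tiles mean fewer, larger transfers, which is why the scratchpad's size directly affects how efficiently DMA engines can keep the compute engines busy.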

Software and Compilation

Intel provides tools for programming the NPU, including the Intel NPU Acceleration Library, a Python library that works with PyTorch. This lets developers keep using familiar AI frameworks with the NPU.

The library also supports PyTorch’s torch.compile, which optimizes AI models before they run. This can improve compute utilization and speed up inference.
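
One common optimization that model compilers perform is operator fusion: merging an elementwise step (such as adding a bias or applying ReLU) into the matrix multiply that produces its input, so the intermediate result never makes a round trip through memory. The toy pass below illustrates the idea on a simple chain of operations. The `Op` class, the set of fusable operations, and the fusion rule are hypothetical simplifications, not how torch.compile or Intel's NPU compiler is implemented.

```python
from dataclasses import dataclass
from typing import List

# Elementwise ops that may be folded into a preceding matmul/conv
# (an assumption for this toy; real compilers use much richer rules).
FUSABLE_EPILOGUES = {"add_bias", "relu"}


@dataclass
class Op:
    kind: str


def fuse_epilogues(ops: List[Op]) -> List[Op]:
    """Merge elementwise ops into the matmul/conv that produces their input.
    Assumes a linear chain where each op consumes the previous op's output."""
    fused: List[Op] = []
    for op in ops:
        if fused and op.kind in FUSABLE_EPILOGUES and fused[-1].kind.startswith(("matmul", "conv")):
            fused[-1] = Op(f"{fused[-1].kind}+{op.kind}")
        else:
            fused.append(op)
    return fused


if __name__ == "__main__":
    graph = [Op("matmul"), Op("add_bias"), Op("relu"), Op("softmax")]
    print([op.kind for op in fuse_epilogues(graph)])  # ['matmul+add_bias+relu', 'softmax']
```

Here four operations become two, removing two intermediate tensors that would otherwise be written out and read back.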

Intel’s compiler technology translates AI models into work the NPU can run. It covers a range of workloads, including language models and other AI applications.

The software stack makes it easier to use the NPU. Developers can focus on their AI work without worrying about hardware details.

FAQs

How to Check If My Intel® Processor Has an Integrated Neural Processing Unit (NPU)

To determine if your Intel processor has an integrated NPU, you can use the Intel® Processor Identification Utility, which reports detailed information about your processor’s features and capabilities. Download and run the utility, then check the processor features it lists. You can also look up your processor model’s specifications on Intel’s Ark website (ark.intel.com). On Windows, an NPU appears in Device Manager under “Neural processors” as “Intel® AI Boost.” Note that the Intel® Gaussian & Neural Accelerator (GNA) is a separate, low-power accelerator, not the NPU. Because NPUs are new, only recent Intel processors, starting with the Core Ultra series, include one.
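
On Linux, a scripted check is also possible. The sketch below assumes a kernel with Intel's NPU driver loaded (the `intel_vpu` module, which exposes the NPU through the kernel's compute-accelerator "accel" subsystem) and assumes the sysfs layout `/sys/class/accel/accel<N>/device/driver`. Both the driver name and the path are assumptions to verify against your distribution's kernel; the root path is a parameter so the logic can be tried on a test directory.

```python
import glob
import os
from typing import Dict

# Assumed sysfs location of accel-class devices.
DEFAULT_SYS_ROOT = "/sys/class/accel"


def accel_drivers(sys_root: str = DEFAULT_SYS_ROOT) -> Dict[str, str]:
    """Map each accel device (accel0, accel1, ...) to the name of its kernel driver,
    read from the basename of the resolved device/driver symlink."""
    drivers: Dict[str, str] = {}
    for entry in sorted(glob.glob(os.path.join(sys_root, "accel[0-9]*"))):
        link = os.path.join(entry, "device", "driver")
        if os.path.islink(link):
            drivers[os.path.basename(entry)] = os.path.basename(os.path.realpath(link))
    return drivers


def has_intel_npu(sys_root: str = DEFAULT_SYS_ROOT) -> bool:
    """True if any accel device is bound to the (assumed) intel_vpu driver."""
    return "intel_vpu" in accel_drivers(sys_root).values()


if __name__ == "__main__":
    print(accel_drivers() or "no accel devices found")
    print("Intel NPU detected" if has_intel_npu() else "no Intel NPU detected")
```

A missing device does not prove the processor lacks an NPU; the driver may simply not be installed, so the Windows and Ark checks remain the more reliable answer.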

What is Intel NPU?

An Intel NPU (Neural Processing Unit) is a specialized processor designed to accelerate artificial intelligence (AI) tasks. It’s like a mini-brain within your computer, optimized for the complex calculations and data processing required for AI applications. Intel integrates NPUs directly into its Core Ultra processors, making AI acceleration more accessible and seamless for users. These NPUs are highly power-efficient and can significantly speed up AI-powered applications compared to relying solely on the CPU.

What does NPU mean?

NPU stands for Neural Processing Unit. It’s a type of specialized processor designed specifically to accelerate artificial intelligence (AI) tasks. NPUs are optimized for the complex calculations and data processing required for AI applications like machine learning, deep learning, and neural networks.

Is NPU better than GPU?

NPUs and GPUs can both run AI workloads, but they make different trade-offs. NPUs are designed specifically for neural-network tasks at low power, while GPUs are more general-purpose parallel processors that also handle graphics-intensive work like gaming and video editing. For sustained AI tasks on a laptop, an NPU usually offers better power efficiency. However, a GPU, especially a discrete one, typically delivers far more raw throughput, so GPUs still hold the edge for the most demanding workloads, such as training models or running large models quickly, as well as for rendering complex 3D scenes.

Is NPU worth it?

Whether an NPU is “worth it” depends on your needs and how you use your computer. If you frequently use AI-powered applications or are interested in exploring the world of AI, an NPU can provide a significant performance boost and enhance your experience. As AI becomes more prevalent in everyday applications, NPUs will likely become increasingly valuable for a wider range of users.

What is an example of an NPU?

Intel’s integrated NPUs found in Meteor Lake and Arrow Lake processors are examples of NPUs. These NPUs accelerate AI tasks on the processor itself, enabling features like AI-enhanced video and image processing, faster machine learning, and more efficient AI inference. Other examples include Google’s Tensor Processing Units (TPUs), used in Google’s data centers, and Apple’s Neural Engine, found in iPhones and iPads.