TPUs and NPUs are changing the game in AI hardware. These specialized chips run AI workloads faster and more efficiently than general-purpose processors.

TPUs, or Tensor Processing Units, are great for big machine learning tasks. Google uses them extensively in its cloud systems. NPUs, or Neural Processing Units, shine in edge computing. They work well in phones and other small devices that need to run AI tasks without using much power.

Both TPUs and NPUs are built just for AI work. They accelerate the matrix arithmetic at the core of neural networks, which helps AI apps run smoother and faster. As AI grows, these chips will become even more important.

CPU vs GPU vs TPU vs NPU

The Processor Puzzle: CPUs, GPUs, TPUs, and NPUs

Modern computing relies on a diverse range of processors, each optimized for specific tasks. Understanding the differences between CPUs, GPUs, TPUs, and NPUs is crucial for navigating the evolving landscape of artificial intelligence and high-performance computing.

CPUs: The Generalists

Central Processing Units (CPUs) are the workhorses of our computers. They’re designed for general-purpose computing, handling a wide variety of tasks from word processing to web browsing. CPUs excel at sequential processing, executing instructions one after another. While versatile, they aren’t the most efficient for the parallel computations required by AI.

GPUs: The Parallel Powerhouses

Graphics Processing Units (GPUs) were originally developed for rendering images and videos. Their parallel architecture, with thousands of cores working simultaneously, makes them ideal for tasks that can be broken down into smaller, independent parts. This parallel processing power makes GPUs well-suited for machine learning, particularly training deep learning models.
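To make "smaller, independent parts" concrete, here is a minimal Python sketch. It brightens a tiny grayscale image one row at a time, and because no row depends on any other, the rows can be handed to separate workers. The function names and the sample image are hypothetical, and Python threads only illustrate the decomposition; they do not reproduce the speedup a GPU gets from thousands of hardware cores.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten_row(row, amount=40):
    """Brighten one row of 8-bit grayscale pixels, clamping at 255."""
    return [min(255, pixel + amount) for pixel in row]


def brighten_image(image, workers=4):
    """Process every row independently; no row needs data from another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so rows come back in place.
        return list(pool.map(brighten_row, image))


# Hypothetical 3x3 image used only for illustration.
image = [
    [0, 100, 200],
    [250, 30, 60],
    [10, 20, 230],
]
result = brighten_image(image)
print(result)
```

The same pattern (identical work applied to many independent pieces of data) is what lets graphics and neural network workloads spread across many cores.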

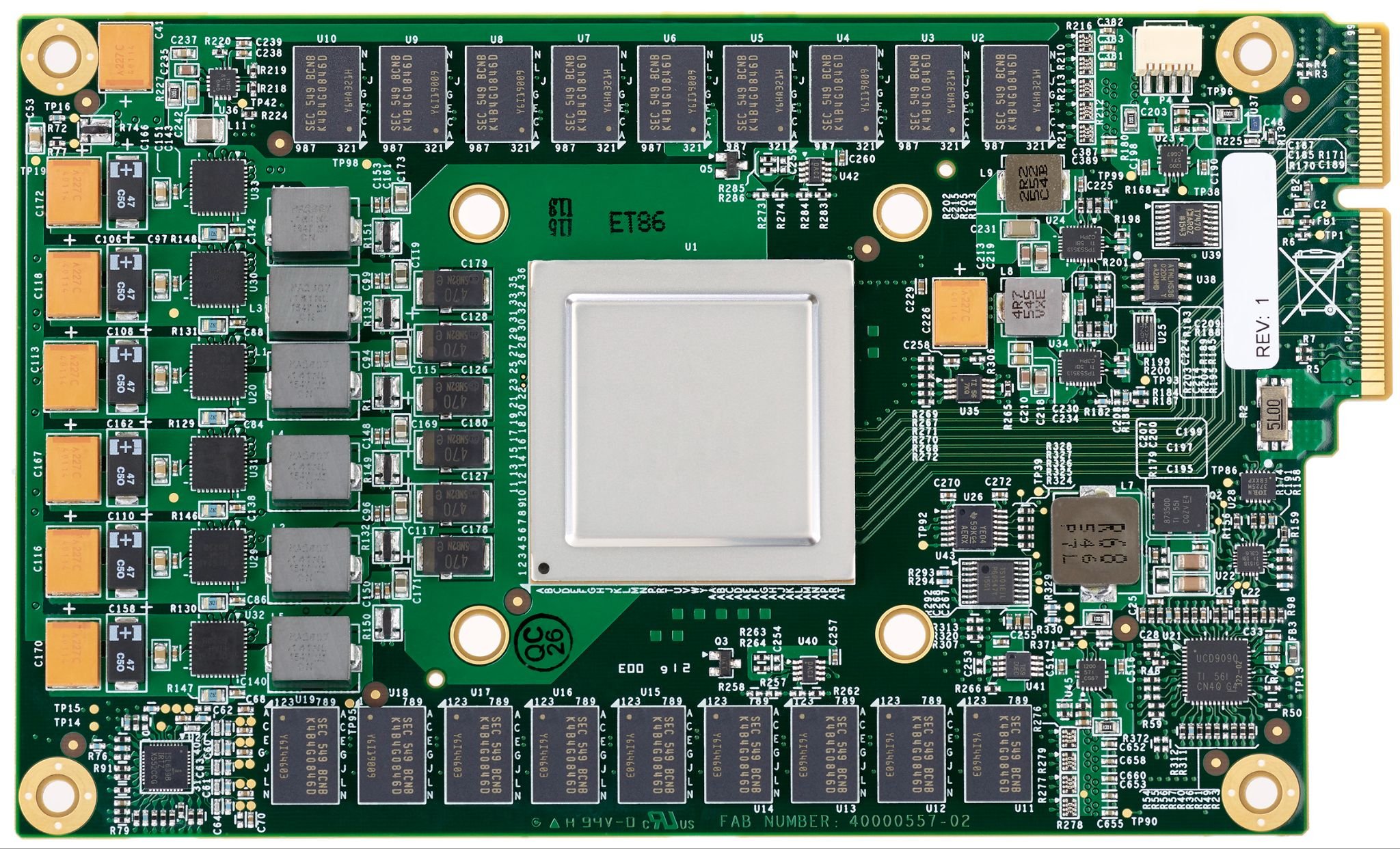

TPUs: AI Accelerators

Zinskauf, CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0, via Wikimedia Commons

Tensor Processing Units (TPUs) are specialized processors designed specifically for machine learning workloads. Developed by Google, TPUs excel at matrix operations, which are fundamental to deep learning. They are optimized for the large-scale computations required for training complex AI models in the cloud. TPUs were designed around TensorFlow, Google’s open-source machine learning framework, and can also be programmed through JAX and PyTorch.
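To show why matrix operations sit at the heart of deep learning, the sketch below computes one fully connected neural network layer in plain Python. The layer is just a matrix-vector product plus a bias, followed by a ReLU activation. The weights, bias, and inputs are made-up values, and this is ordinary CPU code meant to show the arithmetic an accelerator speeds up, not TPU code.

```python
def matvec(weights, inputs):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def dense_layer(weights, bias, inputs):
    """One fully connected layer: relu(W @ x + b)."""
    pre_activation = [z + b for z, b in zip(matvec(weights, inputs), bias)]
    return [max(0.0, z) for z in pre_activation]


# Hypothetical 3-output, 2-input layer used only for illustration.
weights = [[1.0, -2.0], [0.5, 0.5], [-1.0, 1.0]]
bias = [0.0, 1.0, -0.5]
inputs = [2.0, 1.0]

outputs = dense_layer(weights, bias, inputs)
print(outputs)  # [0.0, 2.5, 0.0]
```

A real model stacks many such layers with far larger matrices, which is why hardware built around fast multiply-and-add pays off.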

NPUs: Edge AI Specialists

Neural Processing Units (NPUs) are designed for efficient AI processing on edge devices like smartphones, wearables, and IoT gadgets. They prioritize energy efficiency and real-time performance, enabling AI-powered features like facial recognition, natural language processing, and personalized recommendations directly on the device. NPUs are optimized for specific neural network architectures, enabling faster inference with minimal power consumption.

Comparing the Four

Each type of processor has its strengths and weaknesses, making it suitable for different applications.

| Processor | Strengths | Weaknesses | Typical Use Cases |
|---|---|---|---|
| CPU | General-purpose computing, sequential processing | Not ideal for parallel processing, slower for AI tasks | Everyday computing tasks, operating systems |
| GPU | Parallel processing, fast for many AI tasks | Less energy-efficient than NPUs, not optimized for all AI workloads | Gaming, video editing, machine learning training |
| TPU | Optimized for deep learning, high-performance for large-scale AI | Specialized for specific AI tasks, primarily used in the cloud | Cloud-based AI services, large-scale machine learning |
| NPU | Energy-efficient, real-time AI processing on edge devices | Limited to specific neural network architectures | Smartphones, wearables, IoT devices |

The Future of Processing

The world of processors is constantly evolving. We can expect to see continued advancements in all four types, with each becoming more specialized and efficient. The rise of AI will likely drive further innovation in TPUs and NPUs, leading to even more powerful and energy-efficient AI-powered devices and services. Hybrid approaches, combining different types of processors, may also become more common, allowing systems to adapt to a wider range of tasks.

AI’s Hardware Heroes: TPUs and NPUs

What are TPUs and NPUs?

Think of TPUs and NPUs as the brains behind AI’s rapid growth. They’re special computer chips designed just for machine learning. TPUs (Tensor Processing Units), made by Google, are powerful workhorses, great for big, complicated AI tasks. NPUs (Neural Processing Units) are more like nimble sprinters, perfect for AI on smaller devices like phones.

TPUs: Big AI’s Best Friend

TPUs shine when handling massive amounts of data in cloud computing. They’re what make Google’s AI services, like language translation and image recognition, so fast. Demand for TPUs is rising as more companies rely on cloud-based AI. This increased use could shift the balance of power in the AI chip market.

NPUs: AI on the Go

NPUs are bringing AI to our pockets and homes. They power features like facial recognition on your phone and make smart home devices, well, smart. Companies like Apple and Qualcomm are packing more powerful NPUs into their devices, leading to a boom in mobile AI capabilities.

Why are TPUs and NPUs Important?

These chips are critical for the future of AI. As AI becomes more common, we’ll need faster and more efficient ways to process information. TPUs and NPUs make this possible.

Speed and Efficiency

Both types of processors are built for speed. They can perform the complex calculations needed for AI much faster than regular computer chips. NPUs also focus on energy efficiency, which is important for extending battery life in phones and other small devices.

Expanding AI’s Reach

TPUs and NPUs are pushing AI into new areas. We’re seeing them in self-driving cars, robots, and even in healthcare. The possibilities seem endless.

The Future of AI Hardware

The future looks bright for these specialized chips. They’ll likely become even more powerful and efficient. We’ll also see them in more and more devices, from tiny sensors to powerful servers.

Continued Innovation

Expect to see constant improvements in both TPU and NPU technology. Faster processing speeds and lower energy consumption are key goals.

Wider Adoption

NPUs will likely become standard in most consumer devices. This means more AI-powered features in our everyday lives.

New Frontiers

TPUs and NPUs will play a big role in emerging technologies like augmented reality and advanced robotics.

Key Players in the TPU/NPU Arena

Several companies are leading the charge in developing these AI accelerators.

| Company | Focus |
|---|---|
| Google | TPUs for cloud computing |
| Qualcomm | NPUs for mobile devices |
| Apple | NPUs for mobile devices and computers |

The AI revolution isn’t just about software; it’s about the hardware too. TPUs and NPUs are the unsung heroes powering AI’s incredible progress and shaping its future.

Key Takeaways

- TPUs and NPUs are special chips made for AI tasks

- These chips make AI apps run faster and use less power

- TPUs and NPUs are key to the future of AI in big systems and small devices

Understanding TPUs and NPUs

The growth of AI applications depends on special hardware. While algorithms and models get a lot of attention, it is the hard work of Tensor Processing Units (TPUs) and Neural Processing Units (NPUs) that really drives the fast progress of AI. These chips are specifically designed for machine learning tasks and power everything from large cloud services to the smart devices we use every day.

For the workloads they target, TPUs and NPUs outperform general-purpose processors, which is what lets them run complex AI programs and make devices smarter.

Architecture and Design

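TPUs use a systolic array design: a grid of multiply-accumulate units through which data flows in lockstep, so many multiplications and additions happen in the same clock cycle. This is why they excel at matrix math, which is key for AI. NPU layouts vary from vendor to vendor, but they are likewise organized around the multiply-accumulate operations that neural networks depend on.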
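The idea of a systolic array can be sketched in software. The simulation below is an illustrative assumption, not a description of Google's hardware: it uses an output-stationary dataflow chosen for simplicity, where each cell of the grid owns one entry of the result, values from the first matrix march left to right, and values from the second march top to bottom. The function name `systolic_matmul` is hypothetical.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    a is n x k, b is k x m. Cell (i, j) accumulates result[i][j].
    Returns the product and the number of clock cycles simulated.
    """
    n, k, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]    # one accumulator per cell
    a_reg = [[0] * m for _ in range(n)]  # value moving rightward
    b_reg = [[0] * m for _ in range(n)]  # value moving downward
    cycles = n + m + k - 2               # time for the last operands to arrive

    for t in range(cycles):
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:
                    # Row i of a enters from the left, delayed by i cycles.
                    s = t - i
                    new_a[i][j] = a[i][s] if 0 <= s < k else 0
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:
                    # Column j of b enters from the top, delayed by j cycles.
                    s = t - j
                    new_b[i][j] = b[s][j] if 0 <= s < k else 0
                else:
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b
        # Every cell performs one multiply-accumulate in the same cycle.
        for i in range(n):
            for j in range(m):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc, cycles


product, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product, cycles)  # [[19, 22], [43, 50]] 4
```

The point of the design is that operands are passed between neighboring cells instead of being fetched from memory for every multiplication, so the whole grid stays busy once the data is flowing.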

TPUs can handle big AI models well. They shine in data centers and cloud services. NPUs fit better in small devices. You’ll find them in phones and smart home gadgets.

Performance Metrics

TPUs and NPUs are very fast for AI tasks, outperforming general-purpose CPUs by a wide margin on those workloads. TPUs can do trillions of AI operations per second. NPUs are quick too, but often on a smaller scale.
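A quick back-of-the-envelope calculation shows what "trillions of operations per second" means in practice. The sketch below counts the operations in one large matrix multiplication and divides by a processing rate. Both rates are hypothetical round numbers chosen for illustration, not specifications of any real chip, and the result is a best-case time that ignores memory and scheduling overhead.

```python
def matmul_ops(m, k, n):
    """Operations for an (m x k) by (k x n) matrix product.

    Each of the m*n outputs needs k multiplies and k adds,
    so every multiply-add pair counts as 2 operations.
    """
    return 2 * m * k * n


def ideal_seconds(ops, ops_per_second):
    """Best-case run time if the chip sustained its peak rate."""
    return ops / ops_per_second


ops = matmul_ops(4096, 4096, 4096)

# Hypothetical round-number rates, not specs of any real chip.
slow_rate = 1e12    # 1 trillion operations per second
fast_rate = 100e12  # 100 trillion operations per second

print(f"operations: {ops:,}")
print(f"at 1 trillion ops/s:   {ideal_seconds(ops, slow_rate) * 1000:.2f} ms")
print(f"at 100 trillion ops/s: {ideal_seconds(ops, fast_rate) * 1000:.2f} ms")
```

A single 4096 by 4096 matrix product already takes about 137 billion operations, and large models perform many of these per input, which is why the raw operation rate matters so much.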

These chips use less energy per operation than general-purpose alternatives. This matters for battery life in phones. It also cuts costs in big data centers. TPUs and NPUs get more done with less energy.

Speed isn’t the only factor. These chips also reduce delay in AI responses. This is crucial for real-time apps like self-driving cars.

Comparison with Other Processors

GPUs were the first choice for many AI tasks. They’re still common and very flexible. TPUs and NPUs are more focused on AI work. This makes them faster for those specific jobs.

CPUs can run AI tasks, but much more slowly. They’re good for general computing. FPGAs offer a middle ground. They can be reconfigured for different AI needs.

Here’s a quick comparison:

| Processor | Flexibility | AI Performance | Power Use |
|---|---|---|---|
| CPU | High | Low | High |
| GPU | High | Good | High |
| TPU | Low | Excellent | Low |
| NPU | Medium | Very Good | Very Low |

Industry Applications

Healthcare uses these chips to process medical images fast. This helps doctors spot issues quicker. Self-driving cars need quick AI decisions. TPUs and NPUs make this possible.

Phones with NPUs can do cool AI tricks. Think better photos and voice commands. Smart homes use them for fast responses to your needs.

Big tech companies use TPUs for their online services. This makes web searches and translations super fast. Robots in factories use NPUs to work more like humans.

These chips are pushing AI forward in many fields. They’re making machines smarter and faster every day.

Implementation and Impact

TPUs and NPUs are changing how AI works. They make AI faster and use less power. This affects many tech areas and brings new challenges.

Integration in Technology Ecosystems

TPUs and NPUs are part of many tech systems. Google uses TPUs in its cloud platform for AI tasks. Microsoft and Huawei put NPUs in their devices. These chips work with TensorFlow and other AI tools. They speed up tasks like running BERT models.

Data centers increasingly include dedicated AI accelerators such as TPUs. This helps them handle more AI work. Cloud platforms offer these chips to customers. This lets more people use advanced AI.

Edge devices are starting to use NPUs too. This brings AI power to phones and smart home gadgets. It helps AI work without always needing the cloud.

Impact on AI Improvement

TPUs and NPUs make AI faster and better. They can run bigger neural networks. This leads to smarter AI models.

These chips are great at matrix math. That’s key for many AI algorithms. They can do this math much quicker than regular CPUs.

The speed boost helps researchers try new ideas faster. It also lets companies use more complex AI in their products. This drives progress in fields like natural language processing and computer vision.

Challenges and Considerations

Cost is a big issue with TPUs and NPUs. They’re often pricey, which can limit who can use them. Availability can be a problem too. Not all cloud providers offer these chips; Cloud TPUs, for example, are available only through Google Cloud.

Power use is tricky. While TPUs and NPUs are more efficient than GPUs for AI, they still use a lot of power. This matters for data centers and mobile devices.

Memory access can be a bottleneck. AI models need lots of data, and getting it to the chip fast enough can be hard.
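One common way to reason about this bottleneck is to compare how many operations a workload performs per byte of data it moves against how many operations the chip can perform per byte its memory can deliver. The sketch below does that for a matrix product. It assumes 2-byte values and that each matrix is read or written exactly once, and the chip figures are hypothetical round numbers rather than real specifications.

```python
def matmul_intensity(m, k, n, bytes_per_value=2):
    """Operations per byte moved for an (m x k) by (k x n) product.

    Assumes each input matrix is read once and the output written once.
    """
    ops = 2 * m * k * n
    bytes_moved = bytes_per_value * (m * k + k * n + m * n)
    return ops / bytes_moved


def is_memory_bound(intensity, peak_ops_per_s, bandwidth_bytes_per_s):
    """True when memory cannot feed the chip fast enough to keep it busy."""
    machine_balance = peak_ops_per_s / bandwidth_bytes_per_s
    return intensity < machine_balance


# Hypothetical accelerator: 100 trillion ops/s, 1 trillion bytes/s of memory bandwidth.
PEAK_OPS = 100e12
BANDWIDTH = 1e12

single_input = matmul_intensity(1, 4096, 4096)    # one input vector at a time
large_batch = matmul_intensity(4096, 4096, 4096)  # 4096 inputs at once

print(f"single input: {single_input:.2f} ops/byte, "
      f"memory bound: {is_memory_bound(single_input, PEAK_OPS, BANDWIDTH)}")
print(f"large batch:  {large_batch:.2f} ops/byte, "
      f"memory bound: {is_memory_bound(large_batch, PEAK_OPS, BANDWIDTH)}")
```

Under these assumptions, processing one input at a time performs roughly one operation per byte and leaves the chip waiting on memory, while a large batch reuses each loaded weight many times and keeps the arithmetic units busy.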

Flexibility is another concern. TPUs are great for specific tasks but may not work well for new AI methods. NPUs try to be more flexible, but it’s an ongoing challenge.